Photo by

Gene Gallin on

Unsplash

How to Build a Pharmacy Locator Python Web Scraper | Nov 1st, 2023

In 2021, I embarked on a self-taught journey into front-end web development. In 2022, I decided to advance to back-end web development. That is where I was exposed to databases, and my interest in data analysis was sparked. While researching the different paths into this new field, I discovered Alex The Analyst on YouTube and 'joined' his YouTube Data Analysis Bootcamp.

After completing his web scraping with Python 'class', I decided to challenge myself with a personal project.

I was adventurous enough to check the web scraping jobs posted on Upwork, and I found the task below. I thought, wow, $100 to scrape a website, this must be easy. But I was very wrong.

Kindly allow me to take you through the following two weeks of my life as I worked on the three 'easy' steps listed in the task.

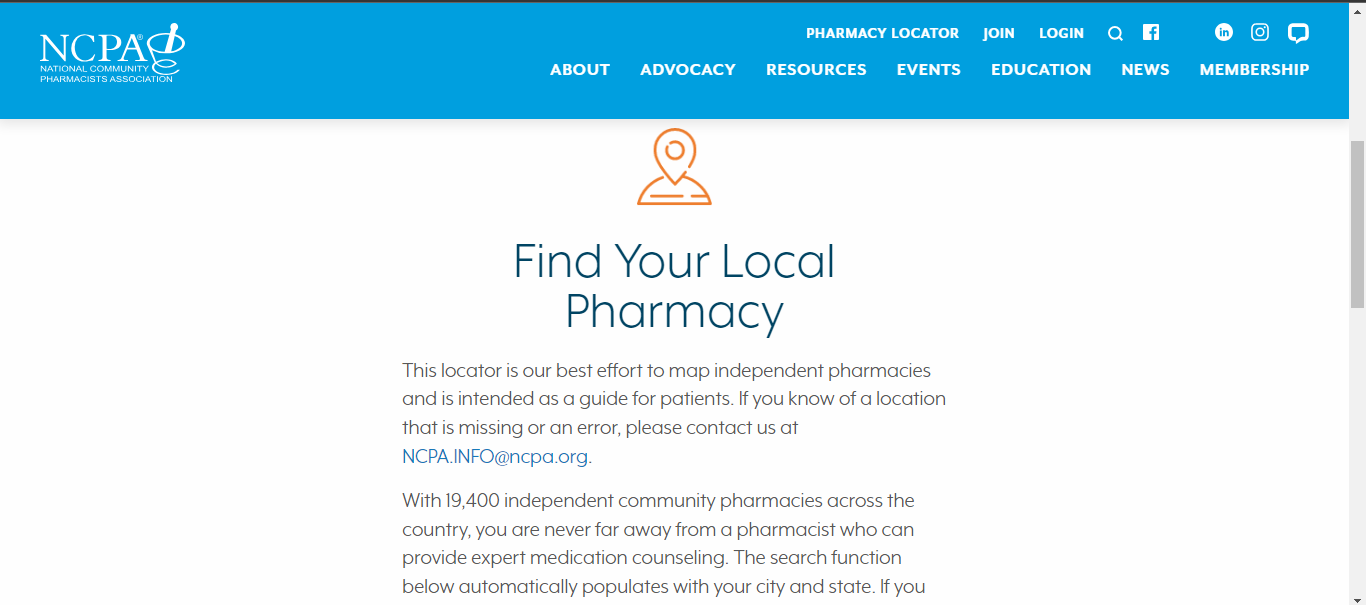

Step One: Go to this website: https://ncpa.org/pharmacy-locator

Note that the site says its database contains 19,400 pharmacies. I took that information lightly until it was time to implement my project.

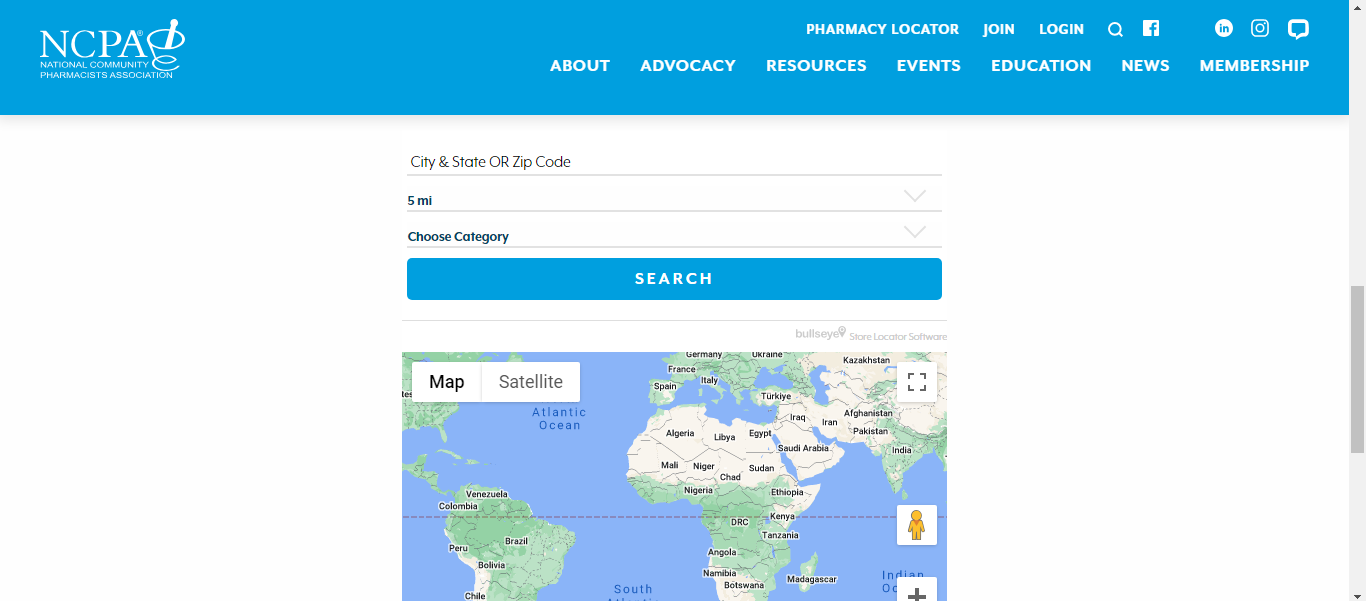

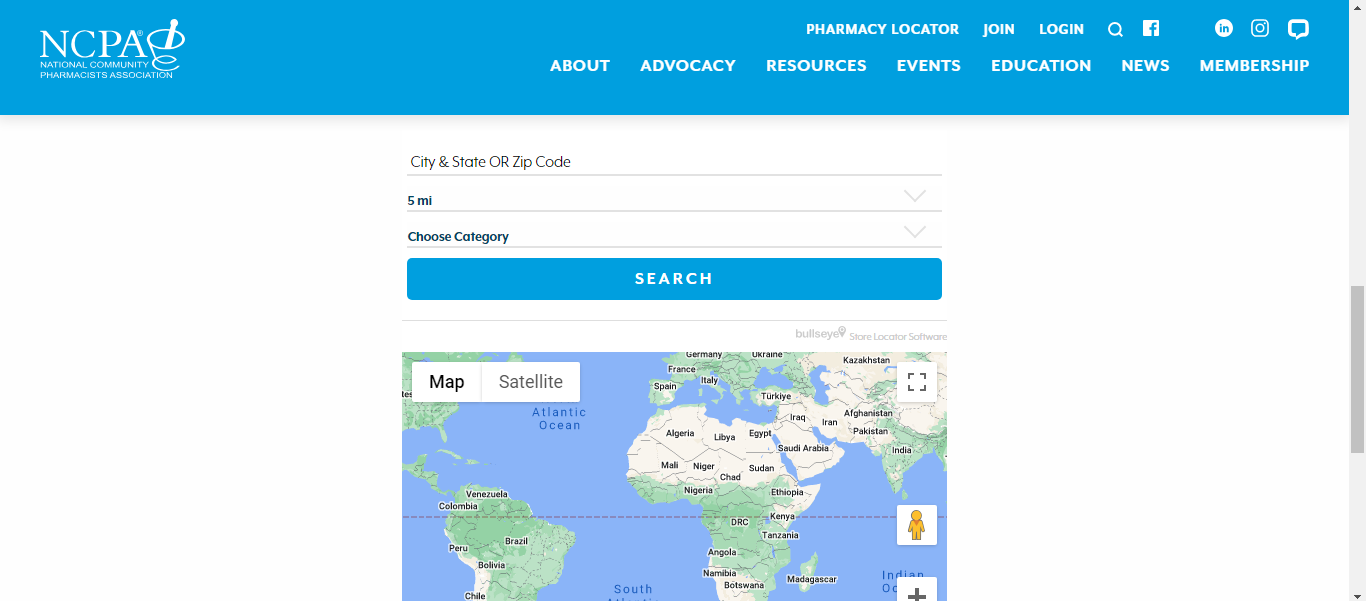

Please note that the user is required to enter either City & State or ZIP Code, choose a radius in miles from one dropdown, choose a service category from another dropdown, and finally click the search button. This takes us to the next step.

Step Two: Scrape from every zip code

A quick search told me that there were 41,704 ZIP codes, ranging from 00501 to 99950. Please do not be fooled by the familiar word 'range' as I was. I thought that all I needed to do was find all the individual numbers within the ranges. I was wrong again. Being very resourceful, I looked for a website where I could scrape the ZIP codes. Easy, right?

I used BeautifulSoup to scrape the ranges from the website and saved the results in an Excel worksheet. My intention was to use SQL to find the individual numbers within the ranges.

I had previously learnt how to use Microsoft SQL Server in Alex's class, but a quick search suggested that the generate_series() function would be the most efficient way to get the individual numbers. In SQL Server, generate_series() could not be used in the SELECT clause, whereas PostgreSQL allowed it there. So I downloaded PostgreSQL and googled how to use it on the fly.

The process was not easy, but I learnt the pros and cons of PostgreSQL and gained hands-on experience. Importing the Excel worksheet into PostgreSQL was not as easy as it is with SQL Server. The function itself was very easy to use, and I got a full list of ZIP codes generated from the ranges.
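For readers who would rather stay in Python, here is a minimal sketch of the same range expansion. It is not the PostgreSQL query I ran, only a Python analogue of what generate_series() did for me. The function name `expand_zip_range` and the two sample ranges are made up for illustration.

```python
def expand_zip_range(start: str, end: str) -> list[str]:
    """Return every 5-digit code from start to end, inclusive, keeping leading zeros."""
    first, last = int(start), int(end)
    if first > last:
        raise ValueError(f"range start {start} is greater than end {end}")
    # zfill restores the leading zeros lost by the int conversion (501 -> "00501").
    return [str(n).zfill(5) for n in range(first, last + 1)]


# Hypothetical ranges, for illustration only.
ranges = [("00501", "00503"), ("99949", "99950")]
candidates = [code for start, end in ranges for code in expand_zip_range(start, end)]
print(candidates)  # ['00501', '00502', '00503', '99949', '99950']
```

Keeping the codes as strings matters here, because a ZIP code such as 00501 loses its leading zeros as soon as it is stored as a number.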

While testing the codes on the locator, I realized that not all of the derived numbers were valid ZIP codes. On further research, I found a ZIP code dataset online, and I decided to use that dataset to scrape pharmacies for every ZIP code.
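Checking derived numbers against a dataset of real ZIP codes can be sketched as a simple set lookup. This is a rough illustration and not my actual code: the column name `zip`, the helper `load_valid_zips`, and the in-memory sample rows are all assumptions standing in for the downloaded file.

```python
import csv
import io

# Stand-in for the downloaded dataset; the "zip" column name is an assumption.
dataset_csv = io.StringIO(
    "zip,city,state\n"
    "00501,Holtsville,NY\n"
    "00544,Holtsville,NY\n"
)


def load_valid_zips(handle, column="zip"):
    """Read the dataset and return its ZIP codes as a set of zero-padded strings."""
    return {row[column].strip().zfill(5) for row in csv.DictReader(handle)}


valid_zips = load_valid_zips(dataset_csv)

# Numbers derived from a range; only some of them are real ZIP codes.
derived = ["00501", "00502", "00503"]
confirmed = [code for code in derived if code in valid_zips]
print(confirmed)  # ['00501']
```

With a real file, the `io.StringIO` object would be replaced by `open(path, newline="")`.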

I quickly realized that, since the task required user input, BeautifulSoup alone would not be sufficient. Selenium proved to be more useful. I followed Selenium's documentation and imported the functionality I needed, which leads us to the next step.

Step Three: Provide the available fields (pharmacy name, address, phone number, website, business hours, and services)

This was the most exciting part. Coming from a web development background, I found myself struggling with a few things in Python: how to read a global variable inside a function, how to handle timeout errors so that the program does not crash, and how to use try/except blocks instead of try/catch blocks.
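The two Python habits I had to pick up can be shown in a small, browser-free sketch. Everything in it is hypothetical: `flaky_fetch` stands in for a page load, and the built-in `TimeoutError` stands in for Selenium's own `TimeoutException` (from `selenium.common.exceptions`), which is the one a Selenium wait raises.

```python
scraped_count = 0  # module-level (global) counter


def record_success():
    # Reading a global needs no declaration, but rebinding it does:
    # without `global`, `scraped_count += 1` raises UnboundLocalError.
    global scraped_count
    scraped_count += 1


def fetch_with_retry(fetch, zip_code, retries=2):
    """Call fetch(zip_code); retry on TimeoutError, return None if every attempt fails."""
    for _ in range(retries + 1):
        try:
            data = fetch(zip_code)
        except TimeoutError:
            continue  # swallow the timeout and try again instead of crashing
        record_success()
        return data
    return None


# Hypothetical stand-in for a page load that times out once, then succeeds.
attempts = {"count": 0}


def flaky_fetch(zip_code):
    attempts["count"] += 1
    if attempts["count"] == 1:
        raise TimeoutError("page took too long to load")
    return {"zip": zip_code, "pharmacies": []}


result = fetch_with_retry(flaky_fetch, "00501")
print(result, scraped_count)  # {'zip': '00501', 'pharmacies': []} 1
```

Returning `None` after the last failed attempt lets the main loop skip a stubborn ZIP code and carry on with the next one.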

I used the time.sleep() function to pause between steps of the automated scraping, and I recorded the pharmacy details in a CSV file.
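As a rough sketch of that loop, assuming a hypothetical `scrape_one(zip_code)` callable that returns one dictionary per pharmacy, pacing the requests and writing the CSV could look like this. The field names follow the list in the task; the function names, the delay value, and the fake data are illustrative only.

```python
import csv
import io
import time

FIELDS = ["name", "address", "phone", "website", "hours", "services"]


def scrape_to_csv(zip_codes, scrape_one, handle, delay_seconds=2.0):
    """Write one CSV row per pharmacy and pause after each ZIP code."""
    writer = csv.DictWriter(handle, fieldnames=FIELDS)
    writer.writeheader()
    total = 0
    for zip_code in zip_codes:
        for pharmacy in scrape_one(zip_code):
            writer.writerow(pharmacy)
            total += 1
        time.sleep(delay_seconds)  # give the site a breather between searches
    return total


def fake_scrape(zip_code):
    # Hypothetical stand-in for the Selenium step; returns placeholder data.
    row = dict.fromkeys(FIELDS, "")
    row["name"] = f"Example Pharmacy {zip_code}"
    return [row]


buffer = io.StringIO()
count = scrape_to_csv(["00501", "00544"], fake_scrape, buffer, delay_seconds=0)
print(count)  # 2
print(buffer.getvalue().splitlines()[0])  # name,address,phone,website,hours,services
```

For a real run, `buffer` would be a file opened with `open("pharmacies.csv", "w", newline="")`, and the delay would be set to something the site can tolerate.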

Thank you for reading this far. If you would like to see the source code, have a look

here.